Live site:

aws.aiengineer.work

TL;DR: Project Summary

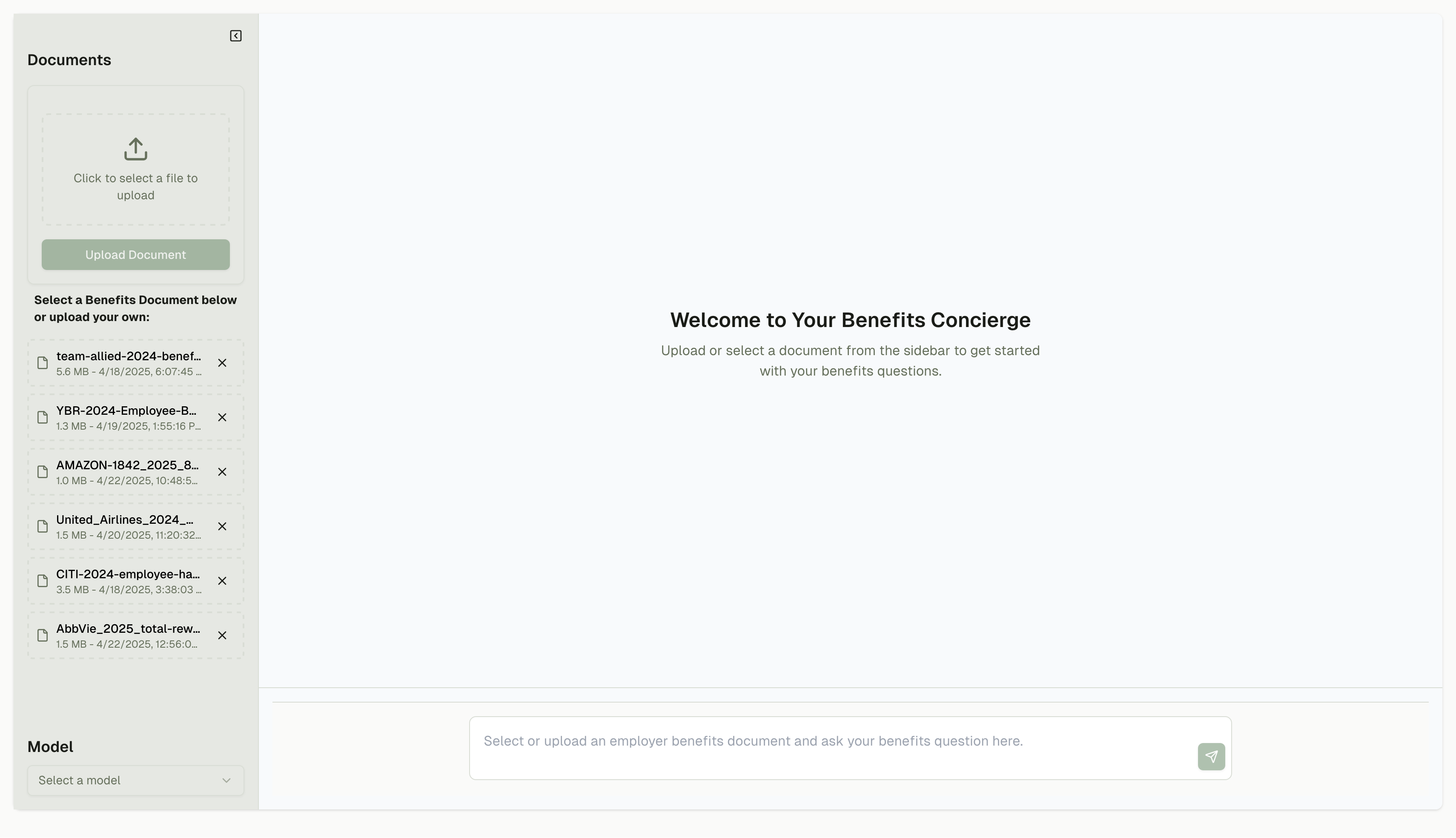

The Benefits Concierge is a full-stack Retrieval Augmented Generation (RAG) application that lets users upload employee benefits documents and ask natural language questions about them. Because cloud application costs can quickly run rampant, I designed, architected, and built this project on a serverless AWS Lambda micro-architecture and deployed it for less than $1.

Key features:

- Tech Stack (Monorepo):
  - Frontend:
    - NextJS 14 (App Router)
    - Tailwind CSS
    - Shadcn UI/Radix UI
    - TypeScript
    - React
  - Backend:
    - AWS CDK TypeScript
    - Lambda Serverless
    - Bedrock AI
    - S3
    - DynamoDB
    - CloudFront
  - DevOps:
    - Route 53 Hosted Zone
    - API Gateway
    - AWS Certificate Manager for SSL/TLS Certificate Provisioning
- Architecture: Monorepo using npm workspaces with clean separation between frontend and backend
- AI Integration: RAG implementation with AWS Bedrock providing multiple model options
- DevOps and Deployment: AWS CloudFront, Route 53, and Certificate Manager for project deployment at https://aws.aiengineer.work
- Key Challenges: CORS configuration, SSL/TLS certificate management, and document processing optimization

The project is a proof of concept that brings together modern web development, serverless architecture, infrastructure as code, and AI integration in a practical business context.

Table of Contents

- Introduction

- Architecture Overview

- Frontend Development

- Backend Infrastructure

- Deployment and DevOps

- Challenges and Solutions

- Conclusion

Introduction

In today’s complex workplace environment, understanding employee benefits can be challenging. Documentation is often scattered across multiple PDFs, making it difficult for employees to find the information they need. The Benefits Concierge project addresses this pain point by creating an intelligent document assistant that allows users to upload benefits documents and ask natural language questions about their coverage.

This portfolio project demonstrates my expertise in modern web development, serverless architecture, and AI integration. By leveraging retrieval-augmented generation (RAG), the application provides contextually relevant answers based on the specific benefits documents uploaded by the user.

Architecture Overview

Benefits Concierge is architected as a monorepo using npm workspaces. This facilitates code sharing between frontend and backend, while maintaining separation of concerns.
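A minimal sketch of that code sharing, assuming a hypothetical shared workspace package (say `packages/shared`) that both the NextJS app and the Lambda handlers depend on. The `DocumentMetadata` fields mirror the metadata the upload code saves; the package path and the `isDocumentMetadata` guard are illustrative, not taken from the project.

```typescript
// Hypothetical shared workspace module (e.g. packages/shared/src/documents.ts),
// imported by both the NextJS frontend and the Lambda handlers.

export interface DocumentMetadata {
  id: string; // S3 object key
  name: string; // original file name
  type: string; // MIME type
  size: number; // bytes
  uploadedAt: number; // epoch milliseconds
}

// Runtime guard so the backend can validate request bodies against the same type
export function isDocumentMetadata(value: unknown): value is DocumentMetadata {
  if (typeof value !== "object" || value === null) return false;
  const v = value as Record<string, unknown>;
  return (
    typeof v.id === "string" &&
    typeof v.name === "string" &&
    typeof v.type === "string" &&
    typeof v.size === "number" &&
    typeof v.uploadedAt === "number"
  );
}
```

Sharing the type through a workspace package means a field rename fails the TypeScript build on both sides instead of surfacing as a runtime mismatch.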

The application consists of two primary components:

Frontend:

- NextJS 14 with App Router

- Tailwind CSS with Shadcn UI for a clean, responsive interface

- Client-side document processing and AI chat conversation management

Backend:

- AWS CDK for infrastructure as code

- Lambda functions for serverless API endpoints

- AWS Bedrock for AI model access

- S3 for document storage with presigned URLs

- DynamoDB for document metadata persistence

The RAG implementation allows the application to process uploaded documents, extract relevant information, and use that context to generate accurate responses to user queries about their benefits.
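The retrieve-then-generate flow can be sketched as follows. This is a minimal illustration, not the project's actual retrieval code: it splits extracted text into overlapping character chunks and ranks them by simple keyword overlap with the question. Production RAG systems usually rank chunks by embedding similarity instead; `chunkText`, `retrieveContext`, and their size, overlap, and top-k defaults are assumptions.

```typescript
// Minimal RAG retrieval sketch (hypothetical helpers; keyword overlap instead of embeddings).

// Split text into chunks of `size` characters, each overlapping the previous by `overlap`.
export function chunkText(text: string, size = 800, overlap = 100): string[] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const chunks: string[] = [];
  for (let start = 0; start < text.length; start += size - overlap) {
    chunks.push(text.slice(start, start + size));
    if (start + size >= text.length) break; // last chunk reached the end of the text
  }
  return chunks;
}

// Lowercase alphanumeric tokens, ignoring words of two characters or fewer
const tokenize = (s: string): string[] =>
  s.toLowerCase().match(/[a-z0-9]+/g)?.filter((w) => w.length > 2) ?? [];

// Score each chunk by how many distinct question terms it contains and join the best `topK`.
export function retrieveContext(question: string, chunks: string[], topK = 3): string {
  const terms = new Set(tokenize(question));
  return chunks
    .map((chunk, index) => {
      const words = new Set(tokenize(chunk));
      let score = 0;
      for (const t of terms) if (words.has(t)) score++;
      return { chunk, index, score };
    })
    .filter((c) => c.score > 0) // drop chunks sharing no terms with the question
    .sort((a, b) => b.score - a.score || a.index - b.index) // ties keep document order
    .slice(0, topK)
    .map((c) => c.chunk)
    .join("\n---\n");
}
```

The string returned by `retrieveContext` is the kind of value that would be passed as the `context` argument of the `generateResponse` function shown in the Backend Infrastructure section, so the model only sees the passages most related to the question.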

Frontend Development

The frontend is built using NextJS 14 with App Router, which provides a modern framework for building React applications with server components, client components, and efficient data fetching.

Key Features

Document Upload and Processing:

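```typescript
// Simplified version of document upload handling.
// getPresignedUrl and saveDocumentMetadata are the app's API helpers (not shown);
// getPresignedUrl is assumed to return both the signed URL and the S3 object key.
const handleUpload = async (file: File) => {
  // Get a presigned URL (and the object key it was signed for) from the backend
  const { url, key } = await getPresignedUrl(file.name);

  // Upload the file directly to S3
  const res = await fetch(url, {
    method: "PUT",
    body: file,
    headers: { "Content-Type": file.type },
  });
  if (!res.ok) throw new Error(`S3 upload failed with status ${res.status}`);

  // Save document metadata, keyed by the S3 object key
  await saveDocumentMetadata({
    id: key,
    name: file.name,
    type: file.type,
    size: file.size,
    uploadedAt: Date.now(),
  });
};
```

Interactive Chat Interface: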

The chat interface allows users to ask questions about their uploaded documents. The application uses stream processing to display AI responses as they’re generated, providing a responsive user experience.
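Reading a streamed HTTP response incrementally in the browser can be sketched with the standard Streams API. This assumes the chat endpoint streams plain UTF-8 text in the response body; the `readStream` name and `onChunk` callback are illustrative, not the project's actual code.

```typescript
// Read a streamed response body incrementally, reporting decoded text as it arrives.
// Assumes the server streams plain UTF-8 text rather than a framed format such as SSE.
export async function readStream(
  body: ReadableStream<Uint8Array>,
  onChunk: (text: string) => void
): Promise<string> {
  const reader = body.getReader();
  const decoder = new TextDecoder();
  let full = "";
  while (true) {
    const result = await reader.read();
    if (result.done) break;
    // stream: true buffers multi-byte characters split across network chunks
    const text = decoder.decode(result.value, { stream: true });
    if (text) {
      full += text;
      onChunk(text);
    }
  }
  // Flush any bytes still buffered in the decoder
  const tail = decoder.decode();
  if (tail) {
    full += tail;
    onChunk(tail);
  }
  return full;
}
```

In a chat component this could be called as `readStream(res.body!, (t) => setAnswer((prev) => prev + t))` after `fetch`ing the chat endpoint, where `setAnswer` is a hypothetical React state setter; each call appends the newest text so the answer grows on screen while the model is still generating.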

The UI components are built with Shadcn UI, which offers accessible and customizable components that integrate well with Tailwind CSS. This approach ensures a consistent design language throughout the application while maintaining flexibility for customization.

Backend Infrastructure

The backend is provisioned using AWS CDK with TypeScript, enabling infrastructure as code that can be version-controlled alongside the application code.

AWS Services Utilized

Lambda Functions: The backend exposes several API endpoints through Lambda functions:

- Document upload (presigned URL generation)

- Document metadata management

- Chat functionality with RAG implementation
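A presigned-URL endpoint mostly validates input, chooses an object key, and asks S3 to sign a `PUT` for that key. The sketch below assumes an API Gateway Lambda proxy integration and injects the signing step as a function so the logic stays testable; in a real handler that function would typically wrap `getSignedUrl` from `@aws-sdk/s3-request-presigner` with a `PutObjectCommand`. The request body shape, allowed MIME types, and `uploads/` key prefix are hypothetical.

```typescript
// Minimal sketch of a presigned-URL Lambda handler (API Gateway proxy integration assumed).
// Request shape, allowed MIME types, and key prefix are hypothetical choices.
import { randomUUID } from "node:crypto";

type ProxyEvent = { body: string | null };
type ProxyResult = { statusCode: number; headers: Record<string, string>; body: string };
type Presign = (key: string, contentType: string) => Promise<string>;

const ALLOWED_TYPES = new Set(["application/pdf", "text/plain"]);

const json = (statusCode: number, payload: unknown): ProxyResult => ({
  statusCode,
  headers: { "Content-Type": "application/json" },
  body: JSON.stringify(payload),
});

export const makeUploadHandler =
  (presign: Presign) =>
  async (event: ProxyEvent): Promise<ProxyResult> => {
    let req: unknown;
    try {
      req = JSON.parse(event.body ?? "{}");
    } catch {
      return json(400, { error: "Body must be valid JSON" });
    }
    const { fileName, contentType } = (req ?? {}) as { fileName?: unknown; contentType?: unknown };
    if (typeof fileName !== "string" || typeof contentType !== "string") {
      return json(400, { error: "fileName and contentType are required" });
    }
    if (!ALLOWED_TYPES.has(contentType)) {
      return json(415, { error: `Unsupported type: ${contentType}` });
    }
    // Unique, sanitized key so uploads with the same file name never overwrite each other
    const safeName = fileName.replace(/[^A-Za-z0-9._-]/g, "_");
    const key = `uploads/${randomUUID()}-${safeName}`;
    const url = await presign(key, contentType);
    return json(200, { url, key });
  };
```

Returning the key alongside the URL lets the client record exactly which object it uploaded when it saves the document metadata.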

AWS Bedrock: The application integrates with AWS Bedrock to provide access to various foundation models, allowing users to choose different AI providers for their questions.
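Offering several Bedrock models means handling several request and response formats, because `InvokeModel` passes the body through in each provider's native schema. A minimal sketch of that mapping, assuming model IDs are matched by provider prefix; the helper names and the set of providers covered are illustrative, and each model's Bedrock documentation remains the authority on its fields. Bedrock's Converse API is an alternative that accepts one message format across supported models.

```typescript
// Hypothetical helpers mapping one prompt onto provider-specific InvokeModel bodies,
// following each provider's native Bedrock request/response fields.

type GenerationSettings = { maxTokens: number; temperature: number };
type Family = "anthropic" | "titan" | "mistral" | "meta";

function modelFamily(modelId: string): Family {
  // Drop a cross-region inference prefix such as "us." or "eu." before matching
  const id = modelId.replace(/^(us|eu|apac)\./, "");
  if (id.startsWith("anthropic.")) return "anthropic";
  if (id.startsWith("amazon.titan-text")) return "titan";
  if (id.startsWith("mistral.")) return "mistral";
  if (id.startsWith("meta.")) return "meta";
  throw new Error(`No request mapping for model ${modelId}`);
}

export function buildRequestBody(modelId: string, prompt: string, s: GenerationSettings): string {
  switch (modelFamily(modelId)) {
    case "anthropic": // Claude Messages API format
      return JSON.stringify({
        anthropic_version: "bedrock-2023-05-31",
        max_tokens: s.maxTokens,
        temperature: s.temperature,
        messages: [{ role: "user", content: [{ type: "text", text: prompt }] }],
      });
    case "titan":
      return JSON.stringify({
        inputText: prompt,
        textGenerationConfig: { maxTokenCount: s.maxTokens, temperature: s.temperature },
      });
    case "mistral": // instruction-tagged prompt
      return JSON.stringify({
        prompt: `<s>[INST] ${prompt} [/INST]`,
        max_tokens: s.maxTokens,
        temperature: s.temperature,
      });
    case "meta":
      return JSON.stringify({ prompt, max_gen_len: s.maxTokens, temperature: s.temperature });
    default:
      throw new Error(`No request mapping for model ${modelId}`);
  }
}

// Pull the generated text out of the decoded JSON response body
export function extractCompletion(modelId: string, body: any): string {
  switch (modelFamily(modelId)) {
    case "anthropic": return body.content?.[0]?.text ?? "";
    case "titan": return body.results?.[0]?.outputText ?? "";
    case "mistral": return body.outputs?.[0]?.text ?? "";
    case "meta": return body.generation ?? "";
    default: return "";
  }
}
```

With helpers like these, `generateResponse` could build its body with `buildRequestBody(modelId, prompt, { maxTokens: 500, temperature: 0.2 })` and return `extractCompletion(modelId, parsed)` instead of hard-coding one provider's schema.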

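```typescript
// Example of Bedrock integration for RAG
import {
  BedrockRuntimeClient,
  InvokeModelCommand,
} from "@aws-sdk/client-bedrock-runtime";

// Create the client once so warm Lambda invocations can reuse it
const bedrock = new BedrockRuntimeClient({ region: process.env.AWS_REGION });

export const generateResponse = async (
  query: string,
  context: string,
  modelId: string
) => {
  const prompt = `Based on the following information about employee benefits:

${context}

Please answer this question: ${query}

If the information to answer the question is not provided above,
please state that you don't have enough information to answer.`;

  const response = await bedrock.send(
    new InvokeModelCommand({
      modelId,
      contentType: "application/json",
      accept: "application/json",
      // This body shape (prompt/max_tokens/temperature) matches Mistral-style models;
      // other providers on Bedrock expect different request fields.
      body: JSON.stringify({
        prompt,
        max_tokens: 500,
        temperature: 0.2,
      }),
    })
  );

  // response.body is a Uint8Array, so decode it to a string before parsing
  return JSON.parse(new TextDecoder().decode(response.body));
};
```

Data Storage: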

- S3 buckets for document storage with appropriate CORS configuration

- DynamoDB for storing document metadata and chat history
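One way to keep document metadata and chat history in a single DynamoDB table is a composite key design. The sketch below is an assumption, not the project's documented schema: items are grouped under a per-user partition key (`pk`) and distinguished by sort-key prefixes (`sk`), so one `Query` with `begins_with(sk, ...)` returns either a user's documents or a conversation's messages in order. Attribute names and the user and conversation identifiers are hypothetical.

```typescript
// Hypothetical single-table key design for document metadata and chat history.

type Role = "user" | "assistant";

export interface DocumentItem {
  pk: string; // USER#<userId>
  sk: string; // DOC#<documentId>
  name: string;
  size: number;
  uploadedAt: number;
}

export interface ChatItem {
  pk: string; // USER#<userId>
  sk: string; // CHAT#<conversationId>#<zero-padded epoch ms>
  role: Role;
  content: string;
}

export function documentItem(
  userId: string,
  documentId: string,
  meta: { name: string; size: number; uploadedAt: number }
): DocumentItem {
  return { pk: `USER#${userId}`, sk: `DOC#${documentId}`, ...meta };
}

export function chatItem(
  userId: string,
  conversationId: string,
  timestamp: number,
  role: Role,
  content: string
): ChatItem {
  // Zero-padding makes lexicographic sort-key order match chronological order
  const ts = String(timestamp).padStart(13, "0");
  return { pk: `USER#${userId}`, sk: `CHAT#${conversationId}#${ts}`, role, content };
}

// DocumentClient-style input for QueryCommand (@aws-sdk/lib-dynamodb): one conversation, oldest first
export function conversationQuery(tableName: string, userId: string, conversationId: string) {
  return {
    TableName: tableName,
    KeyConditionExpression: "pk = :pk AND begins_with(sk, :prefix)",
    ExpressionAttributeValues: {
      ":pk": `USER#${userId}`,
      ":prefix": `CHAT#${conversationId}#`,
    },
    ScanIndexForward: true,
  };
}
```

Because both item types share the user's partition key, loading a user's documents or replaying a conversation is a single key-condition query rather than a table scan.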

Deployment and DevOps

The application is deployed using a combination of AWS services and Netlify:

AWS Infrastructure:

- CloudFront for content delivery with edge caching

- Route 53 for DNS management

- AWS Certificate Manager for SSL/TLS certificates

The full-stack application is accessible at https://aws.aiengineer.work, while the project writeup is hosted at https://aiengineer.work using Netlify CDN.

Deployment Pipeline

AWS CDK drives the build and deployment process:

- Monorepo for frontend and backend code

- Code changes are pushed to GitHub

- Frontend is built with NextJS

- Backend is deployed using CDK

- Infrastructure updates are applied

- The application is deployed to AWS

Challenges and Solutions

CORS Configuration

One significant challenge was configuring CORS correctly across the various AWS services. With the frontend hosted separately from the backend APIs, proper CORS headers were essential.
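One detail that commonly causes CORS failures in this kind of setup: API Gateway's preflight options only answer the browser's `OPTIONS` request. With a Lambda proxy integration, the actual `GET`/`POST` responses come from the Lambda itself, so it must also return `Access-Control-Allow-Origin`, and when credentials are allowed that header has to echo a specific origin rather than `*`. A minimal sketch, assuming a hypothetical `corsHeaders` helper and an allow-list containing the live site's origin:

```typescript
// Hypothetical helper: CORS headers a Lambda proxy integration must return on actual responses.
// API Gateway's preflight configuration does not add these for proxy integrations.

const ALLOWED_ORIGINS = new Set(["https://aws.aiengineer.work"]); // assumed allow-list

export function corsHeaders(requestOrigin: string | undefined): Record<string, string> {
  // Unknown origins get no CORS headers, so the browser blocks the response
  if (!requestOrigin || !ALLOWED_ORIGINS.has(requestOrigin)) return {};
  return {
    // Browsers reject "*" for credentialed requests; echo the exact origin instead
    "Access-Control-Allow-Origin": requestOrigin,
    "Access-Control-Allow-Credentials": "true",
    // Tell caches such as CloudFront that the response varies by Origin
    Vary: "Origin",
  };
}
```

A handler would merge these into every response, for example `headers: { ...corsHeaders(event.headers.origin ?? event.headers.Origin), "Content-Type": "application/json" }`, checking both spellings because the header's case depends on the client's HTTP version.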

Solution: Implemented comprehensive CORS configuration in the CDK stack:

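```typescript
// Configuring CORS for API Gateway
import * as apigw from "aws-cdk-lib/aws-apigateway";

// ...inside the stack constructor.
// Explicit origins are required because allowCredentials cannot be combined with "*"
// (the value below is assumed to be the site's own origin).
const allowedOrigins = ["https://aws.aiengineer.work"];

const api = new apigw.RestApi(this, "BedrockAIApi", {
  defaultCorsPreflightOptions: {
    allowOrigins: allowedOrigins,
    allowMethods: ["GET", "POST", "PUT", "DELETE"],
    allowHeaders: ["Content-Type", "Authorization"],
    allowCredentials: true,
  },
});
```

SSL/TLS Certificate Management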

Setting up SSL/TLS certificates for the custom domain required careful configuration across Route 53 and AWS Certificate Manager.

Solution: Created a comprehensive CDK construct to handle domain and certificate setup:

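```typescript
// Creating certificate and domain association
import * as acm from "aws-cdk-lib/aws-certificatemanager";
import * as route53 from "aws-cdk-lib/aws-route53";
import * as targets from "aws-cdk-lib/aws-route53-targets";

// ...inside the stack constructor; hostedZone and the CloudFront distribution
// (configured with this certificate and domainName) are defined elsewhere.
// CloudFront only accepts ACM certificates issued in us-east-1, so this must deploy there.
const certificate = new acm.Certificate(this, "SiteCertificate", {
  domainName: domainName,
  validation: acm.CertificateValidation.fromDns(hostedZone),
});

// Alias record pointing the custom domain at the CloudFront distribution
new route53.ARecord(this, "SiteAliasRecord", {
  zone: hostedZone,
  recordName: domainName,
  target: route53.RecordTarget.fromAlias(
    new targets.CloudFrontTarget(distribution)
  ),
});
```

Document Processing Performance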

Processing large PDF documents directly in the browser caused performance issues for users.

Solution: Implemented a hybrid approach where initial text extraction happens in the browser for immediate feedback, but more intensive processing is offloaded to Lambda functions:

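```typescript
// Browser-side initial processing.
// extractTextFromPDF, setExtractedText, and triggerBackendProcessing are app helpers not shown here.
const extractText = async (file: File): Promise<string | undefined> => {
  if (file.type === "application/pdf") {
    const text = await extractTextFromPDF(file);
    setExtractedText(text);

    // Send the file name and the first 1,000 characters to the backend for advanced processing
    await triggerBackendProcessing(file.name, text.substring(0, 1000));

    return text;
  }

  // Handle other file types...
  return undefined;
};
```

Conclusion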

The Benefits Concierge project demonstrates the power of combining modern web technologies with AI capabilities to solve real-world problems. By leveraging NextJS for the frontend and AWS services for the backend, I’ve created a scalable, maintainable application that provides tangible value to users.

This project showcases my ability to:

- Design and implement full-stack applications

- Work with serverless architectures

- Integrate AI capabilities through RAG

- Deploy and manage cloud infrastructure

- Solve complex technical challenges

Through this project, I’ve deepened my understanding of both frontend and backend technologies, as well as the DevOps practices necessary to bring complex applications to production.